A look at evolving benchmarks for pediatric cardiac reference data

I read with some interest the article “Optimal Normative Pediatric Cardiac Structure Dimensions for Clinical Use” and was amused to see an off-hand reference to Parameter(z):

> Pettersen et al’s paper does not specify which BSA formula was utilized; however, the cardiac dimensions they measured have been normalized using both the DuBois and the Haycock et al formula and are available elsewhere.

I am always curious about the context in which Parameter(z) might appear, and in this case I’d like to add my own two cents.

The authors of the paper set out to review the literature and recommend the “optimal normative data set” for cardiac structures and dimensions: a familiar, if not worthy, cause. Along the way the authors note several criteria (I am going to call them benchmarks) for consideration:

- sample size—larger studies are better

- normalization factor

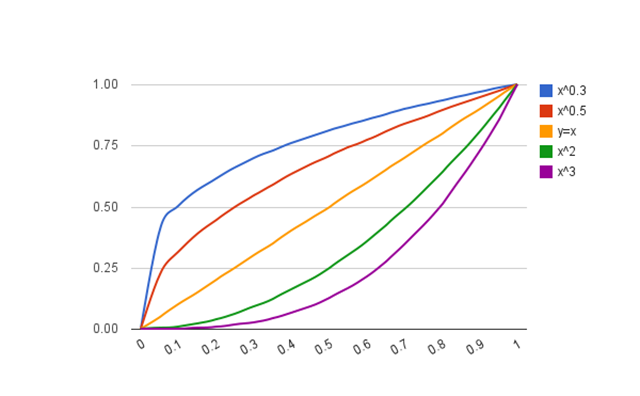

- allometric equation is best

- Haycock BSA equation is best

- measurement technique/protocol—those according to current guidelines are best

- consideration for race and gender

- “sophisticated” analyses (like the LMS method) are mentioned (preferred?)

Race and gender considerations are mentioned, but it is not clear if these were separate criteria, or if they only had a bearing on which BSA formula to use. To give them the benefit of the doubt, I will leave them in as bonus benchmarks. The authors then go on to recommend the data from Detroit as the “optimal” data set.
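Since the choice of BSA formula comes up repeatedly, here is a minimal sketch of the two formulas in question, using their commonly published coefficients (weight in kg, height in cm, BSA in m²). The three example patients are hypothetical values I made up for illustration; they are not taken from any of the studies discussed.

```python
def bsa_dubois(weight_kg, height_cm):
    """DuBois & DuBois body surface area in m^2."""
    return 0.007184 * weight_kg ** 0.425 * height_cm ** 0.725


def bsa_haycock(weight_kg, height_cm):
    """Haycock et al body surface area in m^2."""
    return 0.024265 * weight_kg ** 0.5378 * height_cm ** 0.3964


# Hypothetical patients: (label, weight in kg, height in cm)
patients = [
    ("infant", 4.0, 55.0),
    ("child", 10.0, 75.0),
    ("teen", 60.0, 165.0),
]

for label, weight, height in patients:
    dubois = bsa_dubois(weight, height)
    haycock = bsa_haycock(weight, height)
    pct_diff = 100.0 * (haycock - dubois) / dubois
    print(f"{label:7s} DuBois={dubois:.3f}  Haycock={haycock:.3f}  diff={pct_diff:+.1f}%")
```

The two formulas tend to agree closely for larger patients and diverge for smaller ones, which is why it matters that a reference data set states which formula was used: a z-score computed with one BSA formula against equations built on the other will be shifted.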

While I don’t disagree with any of these benchmarks, I do think some clarification might be useful. An allometric equation is certainly a useful approach for describing the growth of structures (the biologic relationship between structure and body size), and I am a big fan of this approach. A “sophisticated” analysis like the LMS method is a completely different approach, independent and ignorant of any underlying biologic process. I am also a big fan of this type of analysis. The message here is that you either do a predictive analysis, preferably using an allometric equation, OR you do a descriptive analysis, preferably using the LMS method.
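To make the “predictive” route concrete, here is a minimal sketch of an allometric model, y = a × BSA^b, fitted by ordinary least squares on the log-log scale, with a z-score taken from the log-scale residual. This is one common way to do it and is my assumption, not the method of any paper mentioned here. The data are synthetic, and the names `fit_allometric`, `allometric_z`, and the `TRUE_*` constants are hypothetical.

```python
import math
import random
from statistics import mean


def fit_allometric(bsa, y):
    """Fit y = a * BSA**b by least squares on ln(y) versus ln(BSA).

    Returns (a, b, sd), where sd is the residual SD on the log scale.
    """
    lx = [math.log(v) for v in bsa]
    ly = [math.log(v) for v in y]
    mx, my = mean(lx), mean(ly)
    sxx = sum((u - mx) ** 2 for u in lx)
    sxy = sum((u - mx) * (v - my) for u, v in zip(lx, ly))
    b = sxy / sxx
    ln_a = my - b * mx
    resid = [v - (ln_a + b * u) for u, v in zip(lx, ly)]
    sd = math.sqrt(sum(r * r for r in resid) / (len(resid) - 2))
    return math.exp(ln_a), b, sd


def allometric_z(measured, bsa, a, b, sd):
    """z-score of a measurement relative to the fitted allometric curve."""
    predicted = a * bsa ** b
    return (math.log(measured) - math.log(predicted)) / sd


# Synthetic "reference" data; these parameters are made up for illustration.
rng = random.Random(0)
TRUE_A, TRUE_B, TRUE_SD = 2.0, 0.5, 0.1
bsa = [rng.uniform(0.2, 2.0) for _ in range(500)]
y = [TRUE_A * x ** TRUE_B * math.exp(rng.gauss(0.0, TRUE_SD)) for x in bsa]

a, b, sd = fit_allometric(bsa, y)
print(f"a={a:.3f}  b={b:.3f}  log-scale SD={sd:.3f}")
print(f"z for a 2.6 cm structure at BSA 1.0 m^2: {allometric_z(2.6, 1.0, a, b, sd):.2f}")
```

Note that the fit by itself says nothing about whether the resulting z-scores are normally distributed; that still has to be checked separately.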

The principal feature of the LMS method is that it accounts for things like skew and heteroscedasticity and results in valid, normally distributed z-scores. There are other ways to achieve this though, as was recently presented in the manuscript “New equations and a critical appraisal of coronary artery Z scores in healthy children”. Here, the authors apply the Anderson-Darling goodness-of-fit test to determine if their data (derived from an allometric equation) depart from a normal distribution.
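For readers who have not met the LMS method, a short sketch of its z-score formula may help: z = ((y/M)^L − 1)/(L·S), with the limiting form z = ln(y/M)/S when L = 0. Here L is the Box-Cox power (skew), M the median, and S the coefficient of variation. In a real reference set these three vary smoothly with age or body size; the function names and the numbers in the example call are hypothetical.

```python
import math


def lms_z(y, L, M, S):
    """z-score of measurement y given LMS parameters at the patient's covariate."""
    if y <= 0 or M <= 0 or S <= 0:
        raise ValueError("y, M and S must be positive")
    if abs(L) < 1e-12:
        # Limiting case L -> 0: a log-normal model
        return math.log(y / M) / S
    return ((y / M) ** L - 1.0) / (L * S)


def lms_value(z, L, M, S):
    """Inverse: the measurement that corresponds to a given z-score."""
    if abs(L) < 1e-12:
        return M * math.exp(S * z)
    base = 1.0 + L * S * z
    if base <= 0:
        raise ValueError("z is outside the range this L and S can represent")
    return M * base ** (1.0 / L)


# Hypothetical parameters for one point on a reference curve
L, M, S = -0.4, 20.0, 0.08
print(f"z for a measurement of 23.0: {lms_z(23.0, L, M, S):.2f}")
print(f"measurement at z = +2: {lms_value(2.0, L, M, S):.2f}")
```

With L = 1 the formula reduces to the familiar (y − M)/(M·S), which is a useful sanity check: the L term is what absorbs the skew.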

So, the first point is this—one of the benchmarks should read: equations result in valid, normally distributed data, either by use of the LMS (or similar) method, or by performing some type of analysis confirming a normal distribution.
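As a rough illustration of what “some type of analysis confirming a normal distribution” could look like, here is a standard-library sketch of the Anderson-Darling statistic for normality with the mean and SD estimated from the sample. I have not reproduced the cited manuscript’s exact procedure. The small-sample adjustment and the 5% critical value of 0.752 are the commonly tabulated ones for this case, and the two samples are deterministic stand-ins, not real z-scores.

```python
import math
from statistics import NormalDist, mean, stdev

AD_CRITICAL_5PCT = 0.752  # commonly tabulated 5% value, mean and SD estimated


def anderson_darling_normal(values):
    """Adjusted Anderson-Darling statistic (A*^2) for a normality check."""
    n = len(values)
    if n < 8:
        raise ValueError("sample is too small for a meaningful check")
    m, s = mean(values), stdev(values)
    std_normal = NormalDist()
    eps = 1e-15  # keep probabilities away from 0 and 1 so log() is defined
    p = sorted(
        min(max(std_normal.cdf((v - m) / s), eps), 1.0 - eps) for v in values
    )
    total = sum(
        (2 * i - 1) * (math.log(p[i - 1]) + math.log(1.0 - p[n - i]))
        for i in range(1, n + 1)
    )
    a2 = -n - total / n
    return a2 * (1.0 + 0.75 / n + 2.25 / n ** 2)


# Deterministic stand-ins: evenly spaced normal quantiles ("well-behaved"
# z-scores) and their exponentials (a strongly right-skewed sample).
n = 200
z_like = [NormalDist().inv_cdf((i - 0.5) / n) for i in range(1, n + 1)]
skewed = [math.exp(v) for v in z_like]

a2_normal = anderson_darling_normal(z_like)
a2_skewed = anderson_darling_normal(skewed)
for name, stat in (("normal-like", a2_normal), ("skewed", a2_skewed)):
    verdict = "departs from normal" if stat > AD_CRITICAL_5PCT else "no evidence of departure"
    print(f"{name:12s} A*^2={stat:.3f}  {verdict}")
```

If SciPy is available, `scipy.stats.anderson(values, dist='norm')` returns the statistic along with a table of critical values, which is probably the more practical route.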

The second point is this: I don’t think the Detroit data hold up well against these benchmarks, and I don’t think they should be described as “optimal”. Certainly, theirs is a large study (>700 patients), and they indeed followed current guidelines for the measurements. However, on every other point I believe they fail:

- they do not use an allometric equation (theirs is a polynomial equation)

- they do not use the preferred BSA equation (via a personal communication, I learned they used DuBois & DuBois)

- they do not include race or gender in their analysis (in fact, no demographic data is presented—at all)

- they do not use the LMS method or perform any distribution analysis

Are they better than nothing? Absolutely.

A step in the right direction? Agreed.

But optimal?

I will say this about the Detroit z-score calculator though: it is the most popular of all the calculators at Parameter(z). In the past 6 months:

- 19,165 pageviews; 15.26% of all site traffic

- average 160 visits per day

- average 3:56 time on page

- visited most by users in California, Virginia, Georgia, Chile, and North Carolina

The equations may not be optimal, but—for better or worse—they are getting a lot of use.