Examining CMR references for LVEDV reveals interesting differences and casts doubt on the practice of generating z-scores for indexed values

I have been tinkering with z-scores for cardiac MRI, and I thought it would be worth comparing two references for left ventricular end-diastolic volume (LVEDV), Alfakih et al. and Buechel et al. (I always find this stuff interesting).

So I created some tables (using the mean and ± 2 SD limits), generated some charts, and then calculated z-scores over a range of LVEDV values for two hypothetical patients (view the spreadsheet and calculations for these data HERE).

Data:

First, the Alfakih data: based on their published values for “younger men” using SSFP, the indexed LVEDV (LVEDVi) is 87.6 ± 15.6 ml/m² (mean ± SD). Multiplying by BSA gives the absolute volumes below.

| BSA (m²) | ULN (ml) | Mean (ml) | LLN (ml) |
|---|---|---|---|
| 0.5 | 59 | 44 | 29 |
| 0.6 | 71 | 53 | 34 |
| 0.7 | 83 | 61 | 40 |
| 0.8 | 95 | 70 | 46 |
| 0.9 | 106 | 79 | 51 |
| 1.0 | 118 | 88 | 57 |
| 1.1 | 130 | 96 | 63 |
| 1.2 | 142 | 105 | 68 |
| 1.3 | 154 | 114 | 74 |
| 1.4 | 166 | 123 | 80 |
| 1.5 | 177 | 131 | 86 |
| 1.6 | 189 | 140 | 91 |
| 1.7 | 201 | 149 | 97 |
| 1.8 | 213 | 158 | 103 |
| 1.9 | 225 | 166 | 108 |
| 2.0 | 236 | 175 | 114 |
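The Alfakih table is simply the indexed reference rescaled by BSA. Here is a minimal Python sketch, assuming the limits are the indexed mean ± z·SD multiplied by BSA, with z = 2 as described above. The function name `alfakih_row` is hypothetical and is not taken from either paper.

```python
# Sketch: absolute LVEDV limits derived from an indexed (per-BSA) reference.
# Uses the Alfakih "younger men" SSFP values quoted above: 87.6 ± 15.6 ml/m².
ALFAKIH_MEAN_INDEXED = 87.6  # ml/m²
ALFAKIH_SD_INDEXED = 15.6    # ml/m²


def alfakih_row(bsa: float, z: float = 2.0) -> tuple[float, float, float]:
    """Return (ULN, mean, LLN) in ml for a given BSA in m².

    Per-BSA indexing assumes volume scales linearly with BSA, so the
    indexed mean and SD are both simply multiplied by BSA.
    """
    mean = ALFAKIH_MEAN_INDEXED * bsa
    sd = ALFAKIH_SD_INDEXED * bsa
    return mean + z * sd, mean, mean - z * sd


if __name__ == "__main__":
    print("BSA (m²)  ULN (ml)  Mean (ml)  LLN (ml)")
    for i in range(5, 21):
        bsa = i / 10
        uln, mean, lln = alfakih_row(bsa)
        print(f"{bsa:8.1f}  {uln:8.0f}  {mean:9.0f}  {lln:8.0f}")
```

Passing `z=1.96` instead keeps every row within about a millilitre of the table above. Under this model both the mean and the spread grow in strict proportion to BSA.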

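And then the Buechel data: based on their allometric equation, $\text{mean LVEDV} = a \cdot \mathrm{BSA}^{b}$, with their published values for boys ($a = 77.5$, $b = 1.38$), and using the z-score form

$$z = \frac{\log_{10}(\mathrm{LVEDV}) - \log_{10}\left(a \cdot \mathrm{BSA}^{b}\right)}{\mathrm{SD}}$$

with their published value for the “SD” = 0.0426 (on the log10 scale):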

| BSA (m²) | ULN (ml) | Mean (ml) | LLN (ml) |
|---|---|---|---|
| 0.5 | 36 | 30 | 25 |
| 0.6 | 47 | 38 | 32 |
| 0.7 | 58 | 47 | 39 |
| 0.8 | 69 | 57 | 47 |
| 0.9 | 82 | 67 | 55 |
| 1.0 | 94 | 78 | 64 |
| 1.1 | 108 | 88 | 73 |
| 1.2 | 121 | 100 | 82 |
| 1.3 | 135 | 111 | 92 |
| 1.4 | 150 | 123 | 101 |
| 1.5 | 165 | 136 | 111 |
| 1.6 | 180 | 148 | 121 |
| 1.7 | 196 | 161 | 132 |
| 1.8 | 212 | 174 | 143 |
| 1.9 | 229 | 188 | 155 |
| 2.0 | 245 | 201 | 166 |
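Because the Buechel model works on the log scale, its limits are multiplicative rather than additive. Here is a minimal sketch, assuming the limits are formed as mean × 10^(±z·SD) with z = 2, and that the SD of 0.0426 is in log10 units. That second point is an assumption, but it is one that reproduces the Buechel z-scores in the tables that follow. `buechel_row` is a hypothetical name.

```python
# Buechel et al. allometric model for boys, as quoted above:
# mean LVEDV = a * BSA**b, with the SD expressed in log10 units (assumed).
BUECHEL_A = 77.5
BUECHEL_B = 1.38
BUECHEL_SD_LOG10 = 0.0426


def buechel_row(bsa: float, z: float = 2.0) -> tuple[float, float, float]:
    """Return (ULN, mean, LLN) in ml for a given BSA in m².

    The limits are symmetric on the log10 scale, so in ml they are
    mean * 10**(+z*SD) and mean / 10**(z*SD): asymmetric around the
    mean and proportional to it.
    """
    mean = BUECHEL_A * bsa ** BUECHEL_B
    factor = 10 ** (z * BUECHEL_SD_LOG10)
    return mean * factor, mean, mean / factor


if __name__ == "__main__":
    print("BSA (m²)  ULN (ml)  Mean (ml)  LLN (ml)")
    for i in range(5, 21):
        bsa = i / 10
        uln, mean, lln = buechel_row(bsa)
        print(f"{bsa:8.1f}  {uln:8.0f}  {mean:9.0f}  {lln:8.0f}")
```

The generated values stay within about a millilitre of the table above. Note that the upper limit sits farther from the mean than the lower limit does, which is what a log-normal reference implies.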


Z-scores:

Patient 1 (BSA 0.7 m²):

| LVEDV (ml) | Z: Alfakih | Z: Buechel |
|---|---|---|
| 15 | -4.3 | -11.7 |
| 20 | -3.9 | -8.8 |
| 25 | -3.4 | -6.5 |
| 30 | -2.9 | -4.7 |
| 35 | -2.5 | -3.1 |
| 40 | -2.0 | -1.7 |
| 45 | -1.5 | -0.5 |
| 50 | -1.1 | 0.6 |
| 55 | -0.6 | 1.5 |
| 60 | -0.1 | 2.4 |
| 65 | 0.3 | 3.2 |
| 70 | 0.8 | 4.0 |
| 75 | 1.3 | 4.7 |
| 80 | 1.7 | 5.3 |
| 85 | 2.2 | 6.0 |
| 90 | 2.7 | 6.5 |

Patient 2 (BSA 1.4 m²):

| LVEDV (ml) | Z: Alfakih | Z: Buechel |
|---|---|---|
| 50 | -3.4 | -9.2 |
| 60 | -2.9 | -7.3 |
| 70 | -2.5 | -5.8 |
| 80 | -2.0 | -4.4 |
| 90 | -1.5 | -3.2 |
| 100 | -1.1 | -2.1 |
| 110 | -0.6 | -1.2 |
| 120 | -0.1 | -0.3 |
| 130 | 0.3 | 0.5 |
| 140 | 0.8 | 1.3 |
| 150 | 1.3 | 2.0 |
| 160 | 1.7 | 2.7 |
| 170 | 2.2 | 3.3 |
| 180 | 2.7 | 3.9 |
| 190 | 3.1 | 4.4 |
| 200 | 3.6 | 4.9 |
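Here is a sketch of the two z-score calculations behind these tables. It assumes the first hypothetical patient has a BSA of 0.7 m² and the second 1.4 m². Those BSA values are my inference, based on the fact that they reproduce the Buechel column to one decimal place. The Alfakih column agrees with this sketch to within about 0.1. The function names are hypothetical.

```python
import math


def z_alfakih(lvedv: float, bsa: float) -> float:
    """Z-score of the per-BSA indexed LVEDV against 87.6 ± 15.6 ml/m²."""
    return (lvedv / bsa - 87.6) / 15.6


def z_buechel(lvedv: float, bsa: float, a: float = 77.5, b: float = 1.38,
              sd_log10: float = 0.0426) -> float:
    """Z-score on the log10 scale against the allometric mean a * BSA**b."""
    predicted = a * bsa ** b
    return (math.log10(lvedv) - math.log10(predicted)) / sd_log10


if __name__ == "__main__":
    # Volumes match the two tables: 15-90 ml in 5 ml steps, 50-200 ml in 10 ml steps.
    for bsa, volumes in ((0.7, range(15, 95, 5)), (1.4, range(50, 210, 10))):
        print(f"BSA {bsa} m²")
        for lvedv in volumes:
            print(f"  {lvedv:4d} ml  Alfakih {z_alfakih(lvedv, bsa):5.1f}  "
                  f"Buechel {z_buechel(lvedv, bsa):5.1f}")
```

The design difference is visible in the code. The Alfakih score moves linearly with volume because the indexed SD is fixed. The Buechel score moves with the logarithm of volume, so equal steps in millilitres shift it less at larger volumes.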

Summary

Buechel et al. sum it up nicely in their discussion:

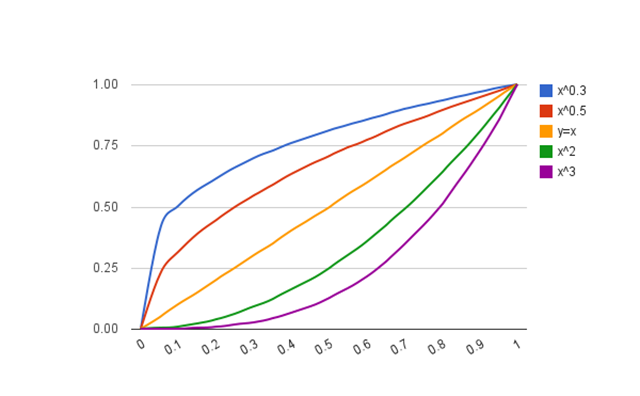

“Cardiac volumes have a non-linear relation to body surface area, and since the exponential values are different for different cardiac parameters, it would not be appropriate to provide normal values simply indexed to BSA.”
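Dividing the Buechel mean by BSA shows why a single indexed normal value cannot work. If mean LVEDV = a·BSA^b, then the "normal" indexed value is a·BSA^(b−1), which still depends on BSA whenever b ≠ 1. Here is a minimal sketch using the boys' coefficients quoted earlier (a = 77.5, b = 1.38):

```python
# Indexed "normal" LVEDV implied by the allometric model: a * BSA**(b - 1).
A, B = 77.5, 1.38


def indexed_mean(bsa: float) -> float:
    """Mean LVEDV divided by BSA, in ml/m², under mean = A * BSA**B."""
    return A * bsa ** B / bsa


for bsa in (0.5, 1.0, 1.5, 2.0):
    print(f"BSA {bsa:.1f} m²: {indexed_mean(bsa):5.1f} ml/m²")
```

The implied normal indexed value climbs from roughly 60 ml/m² at a BSA of 0.5 m² to roughly 101 ml/m² at 2.0 m². Any single indexed mean will therefore be too high for small children and too low for large ones.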

The textbook Echocardiography in Pediatric and Congenital Heart Disease has an excellent and thorough description of the practice of “indexing”. Essentially, the problem boils down to this: for LVEDV, none of the assumptions that per-BSA indexing requires are met:

“In order for the per-BSA method of indexing to work, three assumptions must be met. The relationship to BSA must be linear, the intercept of the regression must be zero, and the variance must be constant over the range of BSA.”
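The three assumptions can be checked directly against the Buechel model. This is a minimal sketch that assumes the boys' coefficients quoted earlier and treats the model's predicted curve (not real patient data) as the "truth". The ordinary least-squares fit is written out by hand so that the block needs nothing beyond plain Python.

```python
# Check the three per-BSA indexing assumptions against the Buechel model
# for boys (a = 77.5, b = 1.38, SD = 0.0426 in log10 units, assumed).
# These are model-generated curves, not patient data.
A, B, SD_LOG10 = 77.5, 1.38, 0.0426

bsas = [i / 10 for i in range(5, 21)]   # 0.5 to 2.0 m²
means = [A * x ** B for x in bsas]      # predicted mean LVEDV, ml

# 1. Linearity: mean per m² would be constant if the relation were linear.
per_bsa = [m / x for m, x in zip(means, bsas)]

# 2. Zero intercept: ordinary least-squares line through the mean curve.
n = len(bsas)
x_bar = sum(bsas) / n
y_bar = sum(means) / n
slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(bsas, means)) / sum(
    (x - x_bar) ** 2 for x in bsas
)
intercept = y_bar - slope * x_bar

# 3. Constant variance: the upper 2 SD half-width in ml grows with the mean.
half_width = [m * (10 ** (2 * SD_LOG10) - 1) for m in means]

print(f"mean/BSA: {per_bsa[0]:.1f} to {per_bsa[-1]:.1f} ml/m²")
print(f"OLS intercept: {intercept:.1f} ml")
print(f"upper 2 SD half-width: {half_width[0]:.1f} to {half_width[-1]:.1f} ml")
```

Each check fails on this curve. Mean/BSA rises steadily across the BSA range, the straight-line fit has a clearly negative intercept, and the spread in millilitres widens as BSA increases.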

If I had to choose a reference for LVEDV in children measured with cardiac MRI, I would have to wonder why anyone would not use the data from Buechel et al., unless they simply did not have those calculations handy.

Well, now they do: