Boston Children’s versus Children’s National Medical Center for a “giant” knockout

When the Children’s National (CNMC) coronary artery z-score equations were published in 2008, I briefly compared them to the 2007 Boston data and noted their similarities. In my opinion, however, the way the CNMC equations handle the “standard deviation” makes them incompatible with some newly proposed cutoffs. Let me explain.

Classifying Aneurysms

The current AHA criteria for classifying coronary artery aneurysms rely on a combination of z-scores and absolute diameters:

- any segment with a z-score > 2.5 = abnormal

- <5 mm = small

- 5 – 8 mm = large

- ≥8 mm = giant

A recent article by Manlhiot et al. points out the folly of using absolute measurements in this instance. They then take the logical next step and introduce a classification system based on z-scores. Using their previously published data (from Boston, see above), the authors advocate the following coronary artery aneurysm z-score classification:

- ≥2.5 – <5 = small

- ≥5 – <10 = large

- ≥10 = giant

The clinical science behind establishing these cutoff points is presented in the article and is beyond the scope of what I am trying to do here. However, it is worth noting that (at least to me) the proposed system has a certain elegance and symmetry; it just seems reasonable.
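To make the two schemes concrete, here is a minimal Python sketch of both rule sets exactly as listed above. The function names are hypothetical, "normal" is shorthand for a segment below the abnormal threshold, and the boundary handling (for example, exactly 5 mm counting as large) is my reading of the lists, not an official implementation of either guideline.

```python
def classify_aha(z: float, diameter_mm: float) -> str:
    """AHA-style rules as listed: z > 2.5 marks the segment abnormal,
    then the absolute internal diameter grades the aneurysm size."""
    if z <= 2.5:
        return "normal"
    if diameter_mm < 5:
        return "small"
    if diameter_mm < 8:
        return "large"
    return "giant"


def classify_z(z: float) -> str:
    """Proposed z-score-only scheme: 2.5-<5 small, 5-<10 large, >=10 giant."""
    if z < 2.5:
        return "normal"
    if z < 5:
        return "small"
    if z < 10:
        return "large"
    return "giant"


# The same segment can land in different categories under the two schemes.
print(classify_aha(8.0, 8.5), classify_z(8.0))
```

With these rules, a segment with z = 8 and an internal diameter of 8.5 mm is "giant" by the absolute-diameter criteria but only "large" by the z-score scheme.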

Z-Score Equations

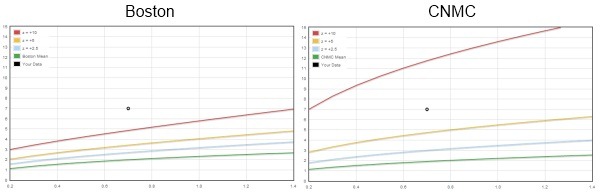

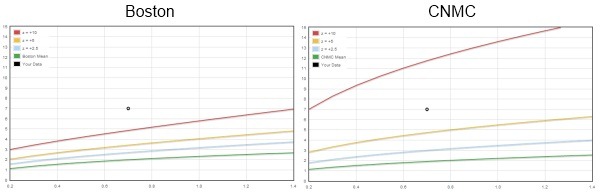

The Boston and CNMC equations predict very similar values for the BSA-adjusted mean diameter, each by using an allometric model. The two sets of equations also yield similar results out to z-scores of about +2. But the similarities end where coronary artery abnormalities begin. If we apply the newly proposed criterion for giant aneurysms (z ≥ 10) using the CNMC equations, patients who previously had giant aneurysms (≥ 8 mm) can end up with only large aneurysms.


The big difference between the two sets of z-score equations, where z = (observed − mean) / SD, lies in how they handle the standard deviation. The Boston equations use a separate regression (on BSA) to predict the SD, while the CNMC equations use the regression mean square error (MSE) statistic as a substitute for the SD.
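To see why the choice of SD matters most at high z-scores, here is a minimal sketch comparing the two approaches for one hypothetical patient. All coefficients are made-up placeholders, not the published Boston or CNMC values: both models share the same allometric mean, the "linear-SD" model predicts an SD proportional to the mean on the millimetre scale, and the "log-RMSE" model uses a constant RMSE on the log scale, chosen so that the two agree at z = +2.

```python
import math

# Hypothetical allometric mean: mean = A * BSA**B (NOT published coefficients).
A, B = 2.5, 0.45
# Hypothetical linear-scale SD, modelled as a fixed fraction of the mean.
SD_FRACTION = 0.10
# Hypothetical log-scale RMSE, chosen so both models give the same diameter
# at z = +2, i.e. exp(2 * RMSE_LOG) == 1 + 2 * SD_FRACTION.
RMSE_LOG = math.log(1 + 2 * SD_FRACTION) / 2


def mean_diameter(bsa: float) -> float:
    """Allometric mean diameter (mm) for a body surface area (m^2)."""
    return A * bsa ** B


def z_linear_sd(diameter: float, bsa: float) -> float:
    """z = (observed - mean) / SD, with the SD predicted on the mm scale."""
    mean = mean_diameter(bsa)
    return (diameter - mean) / (SD_FRACTION * mean)


def z_log_rmse(diameter: float, bsa: float) -> float:
    """z = (ln observed - ln mean) / RMSE, with the RMSE standing in for the SD."""
    return (math.log(diameter) - math.log(mean_diameter(bsa))) / RMSE_LOG


for d in (3.0, 5.0, 6.0):
    print(f"{d:.1f} mm: linear-SD z = {z_linear_sd(d, 1.0):5.1f}, "
          f"log-RMSE z = {z_log_rmse(d, 1.0):5.1f}")
```

With these made-up numbers, a 3.0 mm segment at BSA 1.0 m² scores z = 2.0 under both models, but a 6.0 mm segment scores about 14 under the linear-SD model and about 9.6 under the log-RMSE model: "giant" versus "large" under the proposed z ≥ 10 cutoff. Where the real equations cross over depends entirely on their actual coefficients.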

Residuals

I have always been bothered by the patent substitution of the regression MSE (usually its square root, the RMSE) for the population SD, particularly for the purpose of calculating z-scores. While the “transform both sides” strategy is perfectly legitimate for stabilizing the variance (and indeed, for discovering the allometric relationship!), if you compute z-scores from the log-scale residuals and then back-transform (i.e., exponentiate) the results, you have just modeled positive skew: a band that is symmetric on the log scale is no longer symmetric in millimetres.
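The skew follows from a few lines of algebra. As a sketch, assume the model is fit on the log scale with a constant RMSE \sigma, write the predicted log diameter as \hat{\mu}, and the back-transformed prediction as \hat{D} = e^{\hat{\mu}}. The diameters at ±z, and their distances from \hat{D}, are then:

```latex
\begin{aligned}
D_z &= \exp\left(\hat{\mu} + z\,\sigma\right) = \hat{D}\,e^{z\sigma} \\
D_{+z} - \hat{D} &= \hat{D}\left(e^{z\sigma} - 1\right) \\
\hat{D} - D_{-z} &= \hat{D}\left(1 - e^{-z\sigma}\right) \\
\frac{D_{+z} - \hat{D}}{\hat{D} - D_{-z}} &= \frac{e^{z\sigma} - 1}{1 - e^{-z\sigma}} = e^{z\sigma} > 1
\qquad (z > 0,\ \sigma > 0)
\end{aligned}
```

For z > 0 the excess above the prediction is e^{z\sigma} times the shortfall below it, and that factor grows with z, so the asymmetry is largest at the high z-scores where the aneurysm cutoffs sit.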

Detecting skew shouldn’t be all that hard. If the values are normally distributed about the predicted mean, then it stands to reason that the residuals (observed − predicted) are also normally distributed. A simple plot of the residuals should show us what is going on. Here is the frequency vs. residuals plot from the recent fetal echo reference values of Lee et al.:

(Similar residual plots are provided by the crazy-cool online curve-fitting tool at ZunZun.com.)

That’s not to say that skew doesn’t exist. Indeed, accommodating skew is part of the point and elegance of the recently applied LMS method. It is imperative that we examine the data for the presence or absence of skew, and then describe how we intend to deal with it. Unfortunately, both of these investigations fail to mention this fundamental data characteristic in their respective manuscripts.
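For reference, Cole and Green's LMS method summarises the distribution at each value of the covariate with three smooth curves: L (a Box-Cox power that captures skew), M (the median), and S (the coefficient of variation). Below is a minimal sketch of the standard LMS z-score formula and its inverse; the function names are my own, and the L, M and S values in the loop are hypothetical, not taken from any published coronary artery reference.

```python
import math


def lms_z(y: float, L: float, M: float, S: float) -> float:
    """Z-score of measurement y given the LMS parameters at the patient's
    covariate value (L = Box-Cox power, M = median, S = coefficient of variation)."""
    if L == 0:
        # Limiting case L -> 0: a log transform, i.e. a log-normal distribution.
        return math.log(y / M) / S
    return ((y / M) ** L - 1) / (L * S)


def lms_value(z: float, L: float, M: float, S: float) -> float:
    """Inverse: the measurement corresponding to z-score z.
    For L != 0 this is only defined where 1 + L * S * z > 0."""
    if L == 0:
        return M * math.exp(S * z)
    return M * (1 + L * S * z) ** (1 / L)


# Hypothetical parameters: same median and S, three different skew settings.
for L in (1.0, 0.5, 0.0):
    print(f"L = {L:+.1f}: diameter at z = +10 is {lms_value(10, L, 2.5, 0.1):.2f} mm")
```

Setting L = 1 gives a normal model with SD = M × S on the millimetre scale, and L = 0 gives the log-scale model discussed above, so the skew assumption becomes an explicit, estimated parameter rather than a side effect of the transform.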

Bottom Line

Due to unexplored assumptions about the nature of the residuals/skew of the data, z-score cutoff values are not universal and are absolutely dependent upon their underlying reference z-score equations.